Agentic AI: The Next Evolution in Artificial Intelligence for 2026

Artificial intelligence is changing fast. 2025 was the year AI agents left research labs; 2026 is when they start showing up in production systems. According to recent industry forecasts, 40% of enterprise applications will include task-specific AI agents by the end of 2026, up from under 5% in 2025. That’s not incremental growth. That’s a different way of thinking about automation.

Agentic AI refers to autonomous systems that perceive their environment, make decisions, and take actions to reach specific goals without constant human oversight. Traditional AI models respond to prompts. Agentic systems plan multi-step workflows, use tools, work with other agents, and adapt when conditions change. Gartner predicts that by 2028, 15% of routine work decisions will be made autonomously through agentic AI, and separate industry estimates put the potential economic value at roughly $450 billion.

Here’s the catch: only 2% of organizations have actually deployed AI agents at scale. The gap between what’s possible and what’s happening is enormous. This guide shows you how to understand, build, and deploy agentic AI systems using frameworks that work in real production environments.

Understanding agentic AI: beyond traditional models

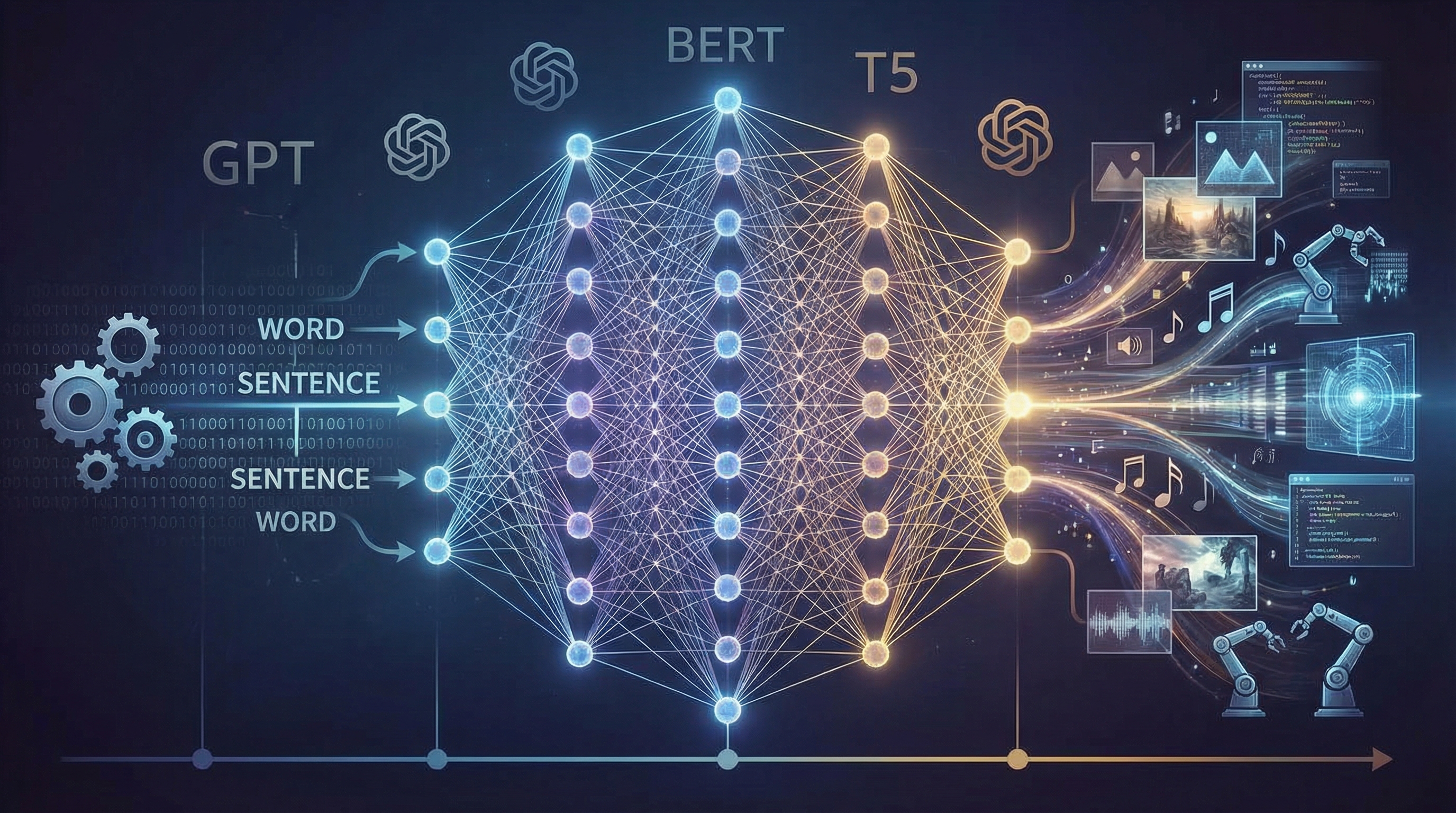

Traditional AI works in reactive mode: you give it input, it processes that input, and it returns output. GPT-4 and Claude are excellent at this, generating text, answering questions, and analyzing data based on what you ask. But used this way, in a single request-response exchange, they don’t have agency: they can’t independently pursue goals over time.

Agentic AI systems differ in three ways:

Autonomy and goal-directed behavior: Agents get high-level objectives and figure out the steps to accomplish them. A customer service agent doesn’t just answer one question. It researches account history, checks inventory systems, processes returns, and follows up with customers across multiple interactions.

Tool use and environment interaction: Agents call APIs, query databases, execute code, and interact with external systems. When a research agent needs current information, it searches the web. When a data analysis agent has a question, it writes and runs Python code to find answers.

Multi-step reasoning and planning: Agents break complex tasks into subtasks, execute them in sequence or parallel, and adjust based on intermediate results. This lets them handle workflows that previously needed human judgment at every step.
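A minimal, framework-free sketch of the loop behind these three properties: the agent plans its next step toward a goal, calls a tool, and feeds the result back into planning so later steps adapt. The plan function is a hard-coded stand-in for an LLM call, and the tools (search_orders, issue_refund) are hypothetical.

```python
from typing import Callable, Optional

# Hypothetical tools; a real agent would wrap APIs or databases here.
def search_orders(customer_id: str) -> dict:
    orders = {"c42": {"order_id": "A1", "status": "damaged"}}
    return orders.get(customer_id, {})

def issue_refund(order_id: str) -> str:
    return f"refund issued for {order_id}"

TOOLS: dict[str, Callable[..., object]] = {
    "search_orders": search_orders,
    "issue_refund": issue_refund,
}

def plan(goal: str, observations: list) -> Optional[tuple[str, dict]]:
    """Stand-in for an LLM planner: pick the next tool call from what is known so far."""
    if not observations:
        return ("search_orders", {"customer_id": goal.split()[-1]})
    last = observations[-1]
    if isinstance(last, dict) and last.get("status") == "damaged":
        return ("issue_refund", {"order_id": last["order_id"]})
    return None  # goal reached, or nothing useful left to try

def run_agent(goal: str, max_steps: int = 5) -> list:
    observations: list = []
    for _ in range(max_steps):  # step cap guards against runaway loops
        step = plan(goal, observations)
        if step is None:
            break
        tool_name, args = step
        result = TOOLS[tool_name](**args)  # act: call the chosen tool
        observations.append(result)        # perceive: feed the result back to the planner
    return observations

print(run_agent("handle refund for customer c42"))
# → [{'order_id': 'A1', 'status': 'damaged'}, 'refund issued for A1']
```

The second tool call only happens because the first call's result said the order was damaged; an unknown customer stops the loop after one lookup.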

The shift from models to agents is like the evolution from calculators to computers. Calculators perform specific operations when told. Computers run programs that accomplish complex goals through sequences of operations. Agentic AI transforms language models from tools that respond to individual requests into systems that manage entire workflows.

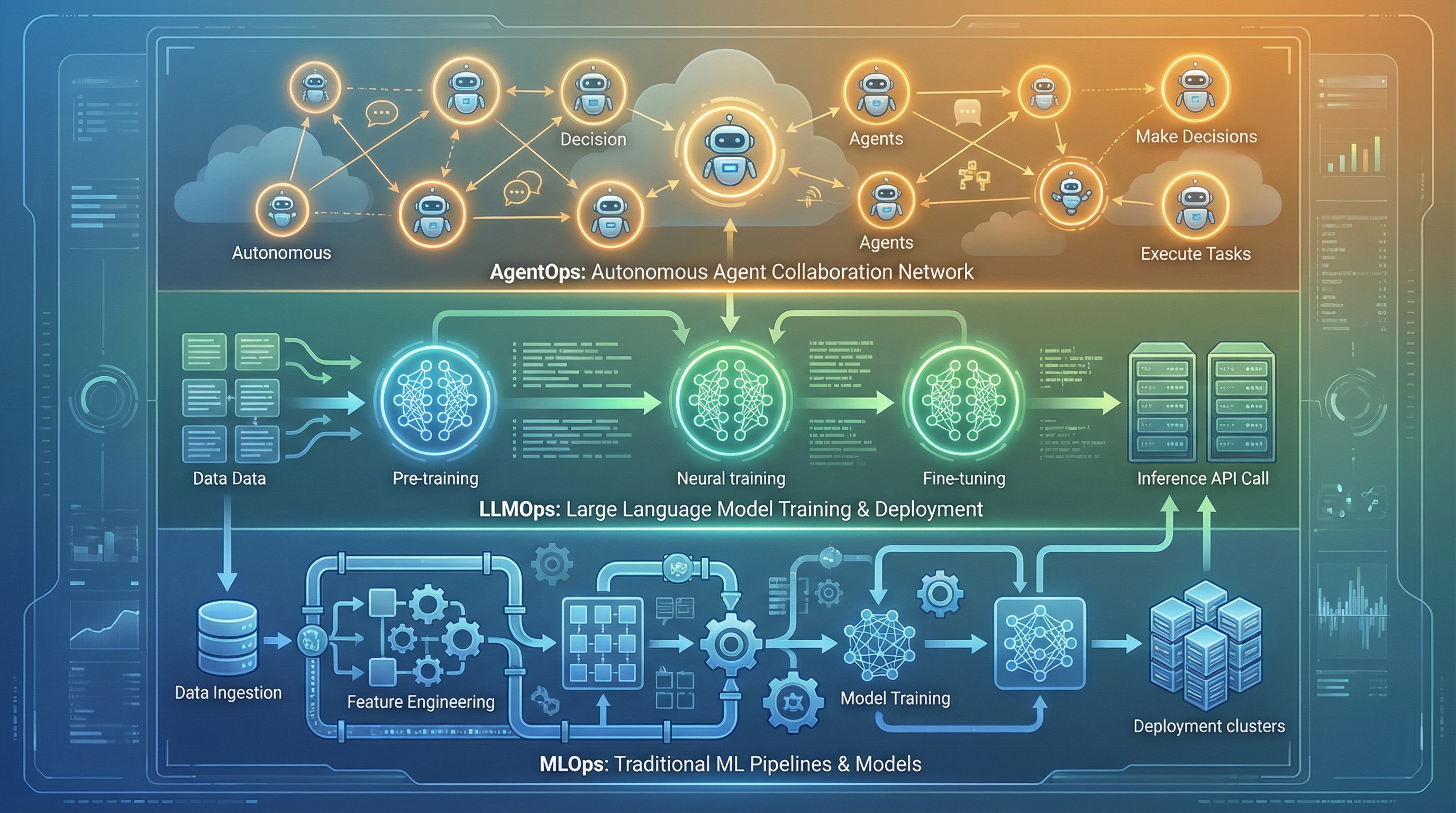

The evolution: MLOps to LLMOps to AgentOps

Understanding agentic AI means recognizing how AI operations evolved to support increasingly sophisticated systems.

MLOps: managing machine learning models

MLOps emerged to handle the challenges of deploying traditional ML models in production. Key practices include continuous integration and deployment for ML workflows, monitoring model performance over time, and automating training, testing, and deployment.

MLOps focuses on models that make predictions: fraud detection systems, recommendation engines, demand forecasting tools. These models are relatively static. They need periodic retraining but not real-time decision-making or complex interactions.

LLMOps: extending operations for language models

As large language models became integral to applications, MLOps principles adapted to their specific needs. LLMOps introduced fine-tuning pipelines for customizing pre-trained models, prompt management for crafting and refining prompts, and scalability solutions for managing the computational demands of large models.

LLMOps addresses challenges unique to language models: managing context windows, handling prompt injection attacks, monitoring for hallucinations, optimizing token usage. But LLMs still operate primarily in request-response patterns.
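Context-window management is one of those routine LLMOps tasks. A minimal sketch: keep the system prompt plus as many of the newest messages as fit a token budget. Token counts are approximated here by whitespace-separated words, an assumption for illustration; a real pipeline would count with the model's own tokenizer.

```python
def estimate_tokens(text: str) -> int:
    # Crude approximation (assumption): one token per whitespace-separated word.
    return len(text.split())

def trim_history(system: str, messages: list[str], budget: int) -> list[str]:
    """Keep the system prompt and as many of the newest messages as fit in budget."""
    remaining = budget - estimate_tokens(system)
    kept: list[str] = []
    for msg in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(msg)
        if cost > remaining:
            break  # this message and everything older would overflow the window
        kept.append(msg)
        remaining -= cost
    return [system] + list(reversed(kept))  # restore chronological order

history = ["hi there", "what is my balance", "it is 40 dollars", "and my last order"]
print(trim_history("you are helpful", history, budget=12))
# → ['you are helpful', 'it is 40 dollars', 'and my last order']
```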

AgentOps: the operational backbone for intelligent agents

AgentOps is the next step, addressing the unique challenges of autonomous agents that make decisions, take actions, and interact with complex environments. Unlike static models, agents are dynamic entities that need new operational capabilities.

End-to-end observability: Agents must be observable at every execution stage. This includes tracking inputs and outputs, monitoring decision-making through reasoning logs, and analyzing how agents interact with external systems.

Traceable artifacts: Every action or decision must be traceable to its origin. This matters for debugging, compliance, and optimization. Decision logs capture what the agent decided and why. Version control tracks changes to agent configurations. Reproducibility ensures you can recreate any agent decision.

Advanced monitoring and debugging: Agents need specialized tools beyond traditional monitoring. RAG pipeline monitoring ensures agents retrieve accurate information. Prompt engineering tools enable iterative refinement. Workflow debuggers visualize multi-step processes.
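A minimal sketch of the observability idea: wrap each agent step so its inputs, output, duration, and any error are recorded under one trace ID, the raw material that monitoring and debugging tools work from. The traced decorator and the in-memory SPANS list are hypothetical; a production system would export these records to a tracing backend instead.

```python
import functools
import time
import uuid

SPANS: list[dict] = []       # in-memory sink; a real system would export these records
TRACE_ID = uuid.uuid4().hex  # one ID shared by every step of a single agent run

def traced(agent: str):
    """Record inputs, output, duration, and errors for each call of the wrapped step."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            span = {"trace_id": TRACE_ID, "agent": agent, "step": fn.__name__,
                    "inputs": {"args": args, "kwargs": kwargs}}
            start = time.perf_counter()
            try:
                span["output"] = fn(*args, **kwargs)
                return span["output"]
            except Exception as exc:
                span["error"] = repr(exc)  # keep the failure in the trace, then re-raise
                raise
            finally:
                span["duration_s"] = time.perf_counter() - start
                SPANS.append(span)
        return wrapper
    return decorator

@traced(agent="researcher")
def lookup_price(ticker: str) -> float:
    prices = {"ACME": 12.5}  # hypothetical data source
    return prices[ticker]

lookup_price("ACME")
try:
    lookup_price("NOPE")
except KeyError:
    pass
for span in SPANS:
    print(span["step"], span["inputs"]["args"], span.get("output"), span.get("error"))
```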

The progression from MLOps to LLMOps to AgentOps reflects increasing autonomy and complexity. Each stage builds on the previous one while introducing new requirements for managing more sophisticated AI systems.

Building blocks: frameworks for agentic AI

Several frameworks have emerged as leaders for building production-ready agentic systems. Understanding their strengths helps you choose the right tool.

CrewAI: role-based agent teams

CrewAI works well when you need agents with distinct personalities and responsibilities. You assign each agent a role, goal, and backstory, like building an actual team. The framework handles task delegation, inter-agent communication, and state management without extensive boilerplate code.

A basic workflow can be set up in roughly 15-30 minutes. CrewAI suits startups and teams building collaborative systems where agents work together like human departments. A market research system might include a researcher agent that analyzes financial data, a writer agent that transforms insights into content, and a quality reviewer that checks accuracy.

LangGraph: complex workflow orchestration

LangGraph represents agents and other processing steps as nodes in a directed graph, enabling conditional logic, multi-team coordination, and hierarchical control with visual clarity. The graph-based approach reportedly made debugging about 60% faster in testing, because you can see exactly where data flows and which agent made each decision.

Use LangGraph when you need fine-grained control over agent behavior or must maintain strict audit trails for regulated industries. The framework is particularly powerful for workflows with complex branching logic or multiple decision points.
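The node-and-edge idea can be illustrated without the framework. The plain-Python sketch below is not LangGraph's API: each node is a function that updates shared state, and each edge carries a routing function that picks the next node from that state, so branching is explicit and every run leaves a path you can audit. The node names and the risk threshold are hypothetical.

```python
from typing import Callable

State = dict
END = "__end__"

def assess(state: State) -> State:
    state["risk"] = 0.8 if state["amount"] > 1000 else 0.1  # hypothetical risk rule
    return state

def auto_approve(state: State) -> State:
    state["outcome"] = "approved"
    return state

def human_review(state: State) -> State:
    state["outcome"] = "escalated to human"
    return state

NODES: dict[str, Callable[[State], State]] = {
    "assess": assess, "auto_approve": auto_approve, "human_review": human_review,
}
# Each edge maps a node to a function that chooses the next node from the current state.
EDGES: dict[str, Callable[[State], str]] = {
    "assess": lambda s: "human_review" if s["risk"] > 0.5 else "auto_approve",
    "auto_approve": lambda s: END,
    "human_review": lambda s: END,
}

def run_graph(start: str, state: State) -> State:
    node = start
    state["path"] = []  # audit trail: every node visited, in order
    while node != END:
        state["path"].append(node)
        state = NODES[node](state)
        node = EDGES[node](state)
    return state

print(run_graph("assess", {"amount": 5000}))
```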

Google Agent Development Kit (ADK)

ADK integrates deeply with the Google Cloud ecosystem, providing access to Gemini 2.5 Pro’s advanced reasoning and over 100 pre-built connectors. The framework powers agents inside Google products like Agentspace, giving you battle-tested infrastructure rather than experimental code.

Bidirectional streaming lets agents handle real-time conversations with low latency. ADK is the natural choice for organizations already invested in Google Cloud or requiring enterprise-scale deployment with built-in security and compliance features.

Microsoft AutoGen: research and experimentation

AutoGen lets agents communicate by passing messages in a loop, with each agent responding, reflecting, or calling tools based on its internal logic. This free-form collaboration makes it well suited to research scenarios where agent behavior requires experimentation, though it’s less suitable for production systems that need predictable outcomes.
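The message-passing loop can be sketched in plain Python. This is not AutoGen's API: two hypothetical agents, a writer and a critic, take turns replying to the shared history until one emits a termination token or the turn limit is reached. The turn limit keeps a free-form conversation from running indefinitely.

```python
from typing import Callable

Message = dict[str, str]

def writer(history: list[Message]) -> str:
    # Hypothetical writer: produces a new draft each time it speaks.
    drafts = sum(1 for m in history if m["sender"] == "writer")
    return f"draft v{drafts + 1}"

def critic(history: list[Message]) -> str:
    # Hypothetical critic: approves only once it sees the second draft.
    return "APPROVED" if history[-1]["content"] == "draft v2" else "needs more detail"

def converse(agents: dict[str, Callable[[list[Message]], str]], order: list[str],
             task: str, max_turns: int = 10) -> list[Message]:
    history: list[Message] = [{"sender": "user", "content": task}]
    for turn in range(max_turns):
        name = order[turn % len(order)]  # agents take turns in a fixed rotation
        reply = agents[name](history)
        history.append({"sender": name, "content": reply})
        if reply == "APPROVED":  # termination condition
            break
    return history

for msg in converse({"writer": writer, "critic": critic}, ["writer", "critic"], "write a summary"):
    print(f'{msg["sender"]}: {msg["content"]}')
```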

Step-by-step tutorial: building your first multi-agent system

Let’s build a practical market research system using CrewAI. This example demonstrates core concepts while creating something immediately useful.

Step 1: environment setup

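First, install the required dependencies. CrewAI calls an LLM provider under the hood, so you also need credentials for one (with the default OpenAI models, an OPENAI_API_KEY environment variable):

```bash
pip install crewai crewai-tools
```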

Import the necessary modules:

```python
from crewai import Agent, Task, Crew
from crewai.tools import tool
```

Step 2: create custom tools

Agents need tools to interact with external systems, so define the tools before the agents that use them. The helpers called inside each tool (perform_search, format_search_results, fetch_financial_data, analyze_metrics) are placeholders you implement with your own search and financial data APIs:

```python
@tool
def search_web(query: str) -> str:
    """Search the web for current information about companies and markets."""
    # Placeholder helpers: implement with your search API of choice
    results = perform_search(query)
    return format_search_results(results)


@tool
def analyze_financials(company: str) -> str:
    """Retrieve and analyze financial data for a company."""
    # Placeholder helpers: implement with your financial data API
    data = fetch_financial_data(company)
    return analyze_metrics(data)
```

Step 3: define specialized agents

Create agents with distinct roles and expertise:

```python
researcher = Agent(
    role='Lead Financial Analyst',
    goal='Uncover comprehensive insights about {company}',
    backstory='''You are an expert financial analyst with 15 years
    of experience analyzing market trends, financial statements, and
    competitive landscapes. You excel at finding patterns in data and
    identifying key business drivers.''',
    tools=[search_web, analyze_financials],  # the tools defined in Step 2
    verbose=True,
    allow_delegation=False,
)

writer = Agent(
    role='Tech Content Strategist',
    goal='Transform complex insights into engaging, accessible content',
    backstory='''You are a skilled content strategist who specializes
    in making technical and financial information understandable for
    business audiences. You know how to structure information for
    maximum impact.''',
    verbose=True,
    allow_delegation=False,
)

quality_reviewer = Agent(
    role='Quality Assurance Specialist',
    goal='Ensure accuracy and consistency of all outputs',
    backstory='''You are a meticulous reviewer with expertise in
    fact-checking and quality control. You catch inconsistencies,
    verify claims, and ensure professional standards.''',
    verbose=True,
    allow_delegation=True,  # the reviewer may hand work back to other agents
)
```

Step 4: define tasks and workflows

Specify what each agent should accomplish:

```python
research_task = Task(
    description='''Research {company} and provide a comprehensive analysis
    including: financial performance over the past 3 years, market position
    and competitive landscape, recent news and developments, growth trends
    and future outlook.''',
    expected_output='''A detailed research report with key metrics, trends,
    and insights formatted in clear sections with supporting data.''',
    agent=researcher,
)

writing_task = Task(
    description='''Using the research findings, create an engaging 800-word
    article that explains the company's position and prospects for a business
    audience. Focus on key insights and implications.''',
    expected_output='''An 800-word article ready for publication with clear
    structure, compelling narrative, and actionable insights.''',
    agent=writer,
    context=[research_task],
)

review_task = Task(
    description='''Review the article for accuracy, consistency, and quality.
    Check that all claims are supported by the research. Suggest improvements
    if needed.''',
    expected_output='''A quality assessment with approval or specific
    improvement recommendations.''',
    agent=quality_reviewer,
    context=[research_task, writing_task],
)
```

Step 5: orchestrate the crew

Bring agents and tasks together:

```python
crew = Crew(
    agents=[researcher, writer, quality_reviewer],
    tasks=[research_task, writing_task, review_task],
    process='sequential',  # tasks execute in order
    verbose=True,
)

# Execute the workflow
result = crew.kickoff(inputs={'company': 'Tesla'})
print(result)
```

Step 6: add error handling and monitoring

Production systems need robust error handling:

```python
import logging

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)


def run_analysis(company_name: str):
    """Run the crew for one company, logging success or failure."""
    try:
        result = crew.kickoff(inputs={'company': company_name})
        logger.info("Successfully completed analysis for %s", company_name)
        return result
    except Exception:
        # logger.exception records the full traceback alongside the message
        logger.exception("Error processing %s", company_name)
        # Implement fallback logic or human escalation here
        raise
```
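The except branch above leaves fallback logic and human escalation as a comment. One common pattern, sketched here with hypothetical helper names (with_retries, escalate_to_human), retries transient failures with exponential backoff and hands the task to a person once the retries run out. It wraps any zero-argument callable, so it is not tied to CrewAI.

```python
import logging
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")
logger = logging.getLogger(__name__)

def escalate_to_human(task_name: str, error: Exception) -> None:
    # Hypothetical hook: in production this might open a ticket or page a reviewer.
    logger.warning("Escalating %s to a human after error: %r", task_name, error)

def with_retries(fn: Callable[[], T], task_name: str,
                 attempts: int = 3, base_delay: float = 1.0) -> Optional[T]:
    """Retry fn with exponential backoff; escalate and return None if every attempt fails."""
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except Exception as exc:
            if attempt == attempts:
                escalate_to_human(task_name, exc)
                return None
            delay = base_delay * 2 ** (attempt - 1)  # 1s, 2s, 4s, ... with the default
            logger.info("Attempt %d of %s failed (%r); retrying in %.1fs",
                        attempt, task_name, exc, delay)
            time.sleep(delay)
    return None

# Demo: a hypothetical flaky call that fails twice before succeeding.
calls = {"n": 0}

def flaky() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("transient network error")
    return "ok"

print(with_retries(flaky, "demo", base_delay=0.0))  # → ok
```

To wrap the crew, you could call with_retries(lambda: crew.kickoff(inputs={'company': 'Tesla'}), 'Tesla analysis'). Because None signals escalation in this sketch, it assumes the wrapped call never legitimately returns None.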

This basic system demonstrates core agentic AI concepts: specialized agents with distinct roles, tool use for external interactions, multi-step workflows with dependencies, and coordination between agents. You can extend this foundation by adding more agents, implementing parallel execution, or integrating with enterprise systems.

Real-world applications transforming industries

Agentic AI is already delivering measurable value across sectors.

Supply chain optimization: Multi-agent systems coordinate procurement, logistics, inventory, and customer updates in real time. Traditional supply chains relied on manual handoffs that took hours or days. Agentic systems compress this to near-real-time responses, collectively rerouting shipments, flagging risks, and adjusting expectations within seconds.

Healthcare diagnostics: In hospitals, one agent monitors patient vitals from IoT devices, another processes lab reports, and a third analyzes symptoms to suggest diagnoses. Together, these agents provide real-time views of patient health, helping doctors make faster, more informed decisions.

Financial services: In algorithmic trading, agents analyze datasets, detect market patterns, and execute trades faster than humans. One agent monitors stock prices, another evaluates risk levels, and a third executes orders automatically. Similar systems handle fraud detection, continuously analyzing transactions and identifying suspicious activities.

Smart cities: Traffic agents control signal lights based on real-time congestion data, energy agents balance electricity loads across grids, and environmental agents monitor air quality. This coordination enables cities to respond quickly to changing conditions, reducing congestion and improving quality of life.

Drug discovery: Research organizations like Genentech have built agent ecosystems that automate complex workflows. Scientists focus on breakthrough discoveries while agents handle data processing, literature reviews, and experimental design. Systems coordinate 10+ specialized agents, each expert in molecular analysis, regulatory compliance, or clinical trial design.

Customer service automation: Modern customer service platforms deploy multiple agents working together. One agent handles intent recognition and routing, another accesses customer data and order history, a third processes refunds or exchanges, and a fourth manages follow-up communications. This coordination can reduce average resolution time from hours to minutes while maintaining context across multiple interactions. Some companies report a 30-40% reduction in support costs alongside improved customer satisfaction scores.
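The routing step at the front of such a pipeline can be sketched like this. Keyword matching stands in for an LLM intent classifier (an assumption for illustration), the handler agents are hypothetical functions, and the shared context dict is what lets later agents see what earlier ones did. Anything the router cannot classify goes to a human.

```python
from typing import Callable, Optional

def refund_agent(msg: str, context: dict) -> str:
    context["last_action"] = "refund"
    return "Your refund has been started."

def order_status_agent(msg: str, context: dict) -> str:
    context["last_action"] = "status"
    return "Your order ships tomorrow."

def human_handoff(msg: str, context: dict) -> str:
    context["last_action"] = "handoff"
    return "Connecting you with a support specialist."

# Keyword rules stand in for an LLM intent classifier (assumption for illustration).
INTENTS: dict[str, tuple[str, ...]] = {
    "refund": ("refund", "return", "money back"),
    "order_status": ("where is", "tracking", "shipped"),
}
HANDLERS: dict[str, Callable[[str, dict], str]] = {
    "refund": refund_agent,
    "order_status": order_status_agent,
}

def classify(msg: str) -> Optional[str]:
    text = msg.lower()
    for intent, keywords in INTENTS.items():
        if any(k in text for k in keywords):
            return intent
    return None

def route(msg: str, context: dict) -> str:
    intent = classify(msg)
    context.setdefault("history", []).append((msg, intent))  # context persists across turns
    handler = HANDLERS.get(intent, human_handoff)  # unknown intent -> human
    return handler(msg, context)

ctx: dict = {}
print(route("Where is my package? No tracking yet", ctx))
print(route("I want a refund", ctx))
print(route("My account was hacked", ctx))
```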

Best practices and common challenges

Deploying agentic AI successfully means addressing several challenges.

Start small and scale gradually

Begin with 2-3 agents solving one specific problem. Prove value before expanding to complex workflows. Organizations seeing ROI started with low-risk use cases like document processing or data validation, then scaled after demonstrating measurable improvements.

Design for observability

Build comprehensive logging from day one. Track which agent handled each decision and why, monitor performance metrics per agent, and store conversation history for debugging. You can’t fix what you can’t see.
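To track which agent handled each decision and why, an append-only decision log is often enough to start. In this sketch the record fields are assumptions rather than a standard schema; each record also stores a short hash of the agent's configuration, so any decision can be tied back to the exact configuration version that produced it.

```python
import hashlib
import json
import os
import tempfile
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

def config_version(config: dict) -> str:
    # Hash a canonical JSON form so the same config always yields the same version.
    canonical = json.dumps(config, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]

@dataclass
class DecisionRecord:
    agent: str
    decision: str
    rationale: str
    inputs: dict
    config_version: str
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

def log_decision(record: DecisionRecord, path: str) -> None:
    # Append-only JSON Lines file: one decision per line, never rewritten.
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(record)) + "\n")

config = {"model": "example-model", "temperature": 0.0, "prompt_version": 3}
log_path = os.path.join(tempfile.mkdtemp(), "decisions.jsonl")
log_decision(
    DecisionRecord(
        agent="refund-agent",
        decision="approve_refund",
        rationale="order marked damaged; amount under auto-approval limit",
        inputs={"order_id": "A1", "amount": 40},
        config_version=config_version(config),
    ),
    log_path,
)
```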

Implement governance early

Gartner predicts that over 40% of agentic AI projects will be canceled by the end of 2027, citing escalating costs, unclear business value, or inadequate risk controls. Set clear operational limits for each agent, define which actions require human approval, create audit trails showing every decision, and test failure scenarios regularly.
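Limits and approval rules can be enforced in code before any tool call runs. This sketch uses a hypothetical per-agent policy table: actions outside an agent's permissions are denied, sensitive actions and amounts above a limit are held for human approval, and every verdict is appended to an audit trail.

```python
from dataclasses import dataclass, field

@dataclass
class Policy:
    allowed_actions: set[str]
    approval_required: set[str] = field(default_factory=set)
    max_amount: float = 0.0  # amounts above this always need a human

POLICIES = {
    "refund-agent": Policy({"lookup_order", "issue_refund"}, {"close_account"}, max_amount=100.0),
}
AUDIT_LOG: list[tuple[str, str, float, str]] = []

def check_action(agent: str, action: str, amount: float = 0.0) -> str:
    """Return 'allow', 'needs_approval', or 'deny', and record the verdict."""
    policy = POLICIES.get(agent)
    if policy is None or (action not in policy.allowed_actions
                          and action not in policy.approval_required):
        verdict = "deny"
    elif action in policy.approval_required or amount > policy.max_amount:
        verdict = "needs_approval"
    else:
        verdict = "allow"
    AUDIT_LOG.append((agent, action, amount, verdict))  # every check is auditable
    return verdict

print(check_action("refund-agent", "issue_refund", 40.0))   # → allow
print(check_action("refund-agent", "issue_refund", 500.0))  # → needs_approval
print(check_action("refund-agent", "delete_database"))      # → deny
```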

Manage costs effectively

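Multi-agent systems can be expensive. In Anthropic’s account of its multi-agent research system, multi-agent runs used about 15× more tokens than ordinary chat interactions, while outperforming a single-agent setup by about 90% on an internal research evaluation. Match model size to task complexity: run simple validation on smaller models and reserve larger models for complex reasoning. Cache results for repeated queries, and monitor token usage per agent against budget limits.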
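The three cost levers above (model tiering, caching, and per-agent budgets) fit in one small wrapper. In this sketch, the model names, the token estimate, the budget values, and call_model are hypothetical placeholders rather than any provider's API; the budget is checked before a call is made, and cached answers cost nothing.

```python
BUDGETS = {"validator": 200, "analyst": 5_000}  # max tokens per agent (assumed values)
USAGE = {agent: 0 for agent in BUDGETS}
CACHE: dict[tuple[str, str], str] = {}

def pick_model(task_kind: str) -> str:
    # Send simple checks to a small model; reserve the large model for open-ended reasoning.
    return "small-model" if task_kind in {"validate", "classify"} else "large-model"

def estimate_cost(prompt: str) -> int:
    # Rough assumption: about 2 tokens per prompt word, covering prompt plus reply.
    return 2 * len(prompt.split())

def call_model(model: str, prompt: str) -> str:
    # Hypothetical stand-in for a real provider call.
    return f"[{model}] reply to: {prompt}"

def run(agent: str, task_kind: str, prompt: str) -> str:
    model = pick_model(task_kind)
    key = (model, prompt)
    if key in CACHE:  # repeated query: answer from cache, spend nothing
        return CACHE[key]
    cost = estimate_cost(prompt)
    if USAGE[agent] + cost > BUDGETS[agent]:  # check the budget before calling
        raise RuntimeError(f"{agent} would exceed its token budget")
    USAGE[agent] += cost
    CACHE[key] = call_model(model, prompt)
    return CACHE[key]

run("validator", "validate", "check invoice 17 totals")  # 4 words -> 8 tokens
run("validator", "validate", "check invoice 17 totals")  # cache hit, no extra tokens
print(USAGE)  # → {'validator': 8, 'analyst': 0}
try:
    run("validator", "validate", "word " * 200)  # 400 estimated tokens
except RuntimeError as err:
    print(err)  # → validator would exceed its token budget
```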

Handle agent conflicts

When agents have competing objectives, implement priority frameworks establishing which goals take precedence, negotiation protocols allowing agents to propose compromises, and human escalation for conflicts the system can’t resolve.
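A priority framework with human escalation can be sketched as a small resolver. Each hypothetical agent submits a proposal tagged with the goal it serves; the resolver picks the proposal whose goal ranks highest and escalates when the top-ranked proposals disagree or when no proposal maps to a known goal. The goal ranking is an assumed example, and the negotiation step is omitted for brevity.

```python
from dataclasses import dataclass
from typing import Optional

# Lower number = higher priority (assumed ordering for illustration).
GOAL_PRIORITY = {"safety": 0, "compliance": 1, "customer_satisfaction": 2, "cost": 3}

@dataclass
class Proposal:
    agent: str
    goal: str
    action: str

def resolve(proposals: list[Proposal]) -> tuple[Optional[Proposal], str]:
    """Return (winning proposal, reason); the winner is None when a human must decide."""
    ranked = [p for p in proposals if p.goal in GOAL_PRIORITY]
    if not ranked:
        return None, "escalate: no proposal maps to a known goal"
    ranked.sort(key=lambda p: GOAL_PRIORITY[p.goal])
    best = ranked[0]
    tied = [p for p in ranked if GOAL_PRIORITY[p.goal] == GOAL_PRIORITY[best.goal]]
    if len({p.action for p in tied}) > 1:  # same priority, different actions
        return None, f"escalate: conflicting actions at priority '{best.goal}'"
    return best, f"chose {best.agent} ({best.goal})"

proposals = [
    Proposal("pricing-agent", "cost", "deny_expedited_shipping"),
    Proposal("service-agent", "customer_satisfaction", "offer_expedited_shipping"),
]
winner, reason = resolve(proposals)
print(reason)  # → chose service-agent (customer_satisfaction)
```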

Optimize team size

Keep teams small: 3-7 agents per workflow. Below three, you’re probably fine with a single agent. Above seven, coordination complexity outweighs benefits unless you use hierarchical structures with team leaders managing subgroups.

The future of agentic AI

The shift to agentic systems is redesigning how work flows through organizations. As Kate Blair from IBM notes, “2026 is when these patterns are going to come out of the lab and into real life.”

Emerging trends include self-observing agents capable of monitoring their own actions, standardized protocols for event tracing and operational controls, and inter-agent collaboration frameworks enabling communication when multiple agents work together.

The infrastructure needed for coordinated agents has finally matured. Three protocols changed everything. Model Context Protocol (MCP) by Anthropic standardizes how agents access tools and external resources. Agent-to-Agent (A2A) by Google enables peer-to-peer collaboration. Agent Communication Protocol (ACP) from IBM also standardizes how agents communicate with one another.
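Under the hood, MCP messages are JSON-RPC 2.0. The sketch below builds a tools/call request of the kind an MCP client sends and answers it with a toy in-process dispatcher, to show the message shape only. The get_weather tool is hypothetical, and a real integration would use an official MCP SDK and one of the protocol's transports rather than this hand-rolled handler.

```python
import json

# Hypothetical tool registry on the server side.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"  # placeholder data

TOOLS = {"get_weather": get_weather}

def make_tool_call(request_id: int, name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 'tools/call' request as an MCP client would send it."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
                       "params": {"name": name, "arguments": arguments}})

def handle(raw: str) -> dict:
    """Toy server-side dispatcher: run the named tool and wrap its text output."""
    req = json.loads(raw)
    if req.get("method") != "tools/call":
        return {"jsonrpc": "2.0", "id": req.get("id"),
                "error": {"code": -32601, "message": "Method not found"}}
    params = req["params"]
    tool = TOOLS.get(params["name"])
    if tool is None:
        return {"jsonrpc": "2.0", "id": req["id"],
                "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"}}
    text = tool(**params.get("arguments", {}))
    return {"jsonrpc": "2.0", "id": req["id"],
            "result": {"content": [{"type": "text", "text": text}], "isError": False}}

response = handle(make_tool_call(1, "get_weather", {"city": "Paris"}))
print(response["result"]["content"][0]["text"])  # → Sunny in Paris
```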

Organizations deploying multi-agent systems today are building competitive advantages that compound over time. The 2% deployment rate reflects hesitation, not technical limitations. Early adopters report 30% cost reductions and 35% productivity gains after implementation.

Conclusion

Agentic AI is the next evolution in artificial intelligence. We’re moving from systems that respond to requests to autonomous agents that pursue goals, use tools, and collaborate to solve complex problems. The frameworks, protocols, and best practices are mature enough for production deployment now.

The journey from traditional AI to agentic systems mirrors the broader evolution of computing. Calculators became computers. Programs became platforms. Tools are becoming teammates. As agents become more capable and trustworthy, they’ll handle increasingly sophisticated workflows, freeing humans to focus on strategy, creativity, and judgment.

Success in this era requires understanding not just how to build agents, but how to orchestrate them effectively, monitor their behavior, and integrate them into existing operations. The organizations that master these skills will gain advantages in efficiency, scalability, and innovation.

Start with one low-risk workflow this month. Pick a framework that matches your team’s skills and business needs. Build, test, iterate, and scale based on real results.