Physical AI Meets Spatial Computing: The VR+ Revolution

The next technological revolution isn’t happening in the cloud or on our screens. It’s unfolding in the space between digital and physical worlds, where artificial intelligence gains a body and virtual reality becomes indistinguishable from lived experience.

Mark Zuckerberg wasn’t wrong about the metaverse. He was early. While critics declared the metaverse dead after Meta’s Reality Labs accumulated nearly $70 billion in losses, three technological currents were quietly converging beneath the surface: Physical AI, spatial computing, and next-generation VR+. Together, they’re changing how we work, train, collaborate, and interact with both digital and physical environments.

This isn’t about cartoon avatars wandering through empty virtual shopping malls. This is about AI systems that can perceive, reason about, and manipulate the physical world in real time. Machines that understand three-dimensional space. Immersive experiences where the boundary between virtual and real dissolves.

Welcome to the VR+ revolution, where physical meets digital and intelligence becomes embodied.

The Rise of Physical AI: When Intelligence Gets a Body

For years, artificial intelligence lived in the digital realm. Large language models could write essays, generate code, and hold conversations, but they couldn’t pick up a coffee cup or navigate a factory floor. That’s changing fast.

Physical AI is AI that can perceive, understand, and interact with the physical world in real time. Unlike traditional AI systems confined to digital environments, Physical AI shows up in robots, autonomous vehicles, sensor systems, and the spatial computing platforms powering next-generation VR and AR.

The technical foundation combines several technologies. Vision-language-action (VLA) models integrate computer vision, natural language processing, and motor control. Like the human brain processing sensory input and coordinating movement, VLA models help machines bridge perception and action.

Neural processing units (NPUs), specialized processors built for neural-network workloads, enable low-latency, energy-efficient AI processing directly on devices. Physical AI systems can run sophisticated models, process high-speed sensor data, and make split-second decisions without cloud dependency. For autonomous vehicles navigating city streets or surgical robots performing delicate procedures, milliseconds matter.

Training methods have evolved too. Reinforcement learning lets robots develop behaviors through trial and error. Imitation learning lets them mimic expert demonstrations. Most powerfully, these approaches work in simulated environments first, where robots can fail safely millions of times before touching the physical world.

NVIDIA’s Omniverse platform lets developers train robots across large numbers of scenarios in virtual environments that obey physical laws. A robot can learn to grasp objects of varying shapes, weights, and materials, navigate obstacles, and recover from failures, all in simulation, before deployment in a warehouse or factory.
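The simulate-first idea can be illustrated with a toy sketch. This is not Omniverse or any production pipeline. It is a minimal tabular Q-learning loop on a hypothetical one-dimensional corridor, where an agent learns by trial and error to reach a goal cell. Every name here (`CorridorSim`, `train`, `greedy_policy`), the reward values, and the hyperparameters are assumptions chosen for illustration.

```python
import random


class CorridorSim:
    """Hypothetical toy simulator: cells 0..length-1, goal at the right end."""

    def __init__(self, length=6):
        self.length = length
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):
        # action 0 = move left, action 1 = move right (walls clamp the position)
        delta = 1 if action == 1 else -1
        self.pos = min(max(self.pos + delta, 0), self.length - 1)
        done = self.pos == self.length - 1
        reward = 1.0 if done else -0.01  # assumed: small cost for every wasted step
        return self.pos, reward, done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    sim = CorridorSim()
    q = [[0.0, 0.0] for _ in range(sim.length)]  # q[state][action]
    for _ in range(episodes):
        state = sim.reset()
        for _ in range(100):  # cap episode length so failures stay cheap
            if rng.random() < epsilon:
                action = rng.randrange(2)  # explore
            else:
                action = 0 if q[state][0] > q[state][1] else 1  # exploit
            nxt, reward, done = sim.step(action)
            # Q-learning update: move the estimate toward reward + discounted best next value
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


def greedy_policy(q):
    """Best known action per cell (0 = left, 1 = right)."""
    return [0 if left > right else 1 for left, right in q]
```

After training, `greedy_policy(train())` should choose "right" in every non-goal cell. A real robotics pipeline would swap the corridor for a physics simulator and the table for a neural network policy, but the loop of acting, failing safely, and updating is the same.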

Physical AI is the intelligence layer powering the next generation of spatial computing. It lets virtual environments respond to physical inputs with new levels of realism and lets mixed reality systems understand and interact with the real world.

Spatial Computing: Mapping the Bridge Between Worlds

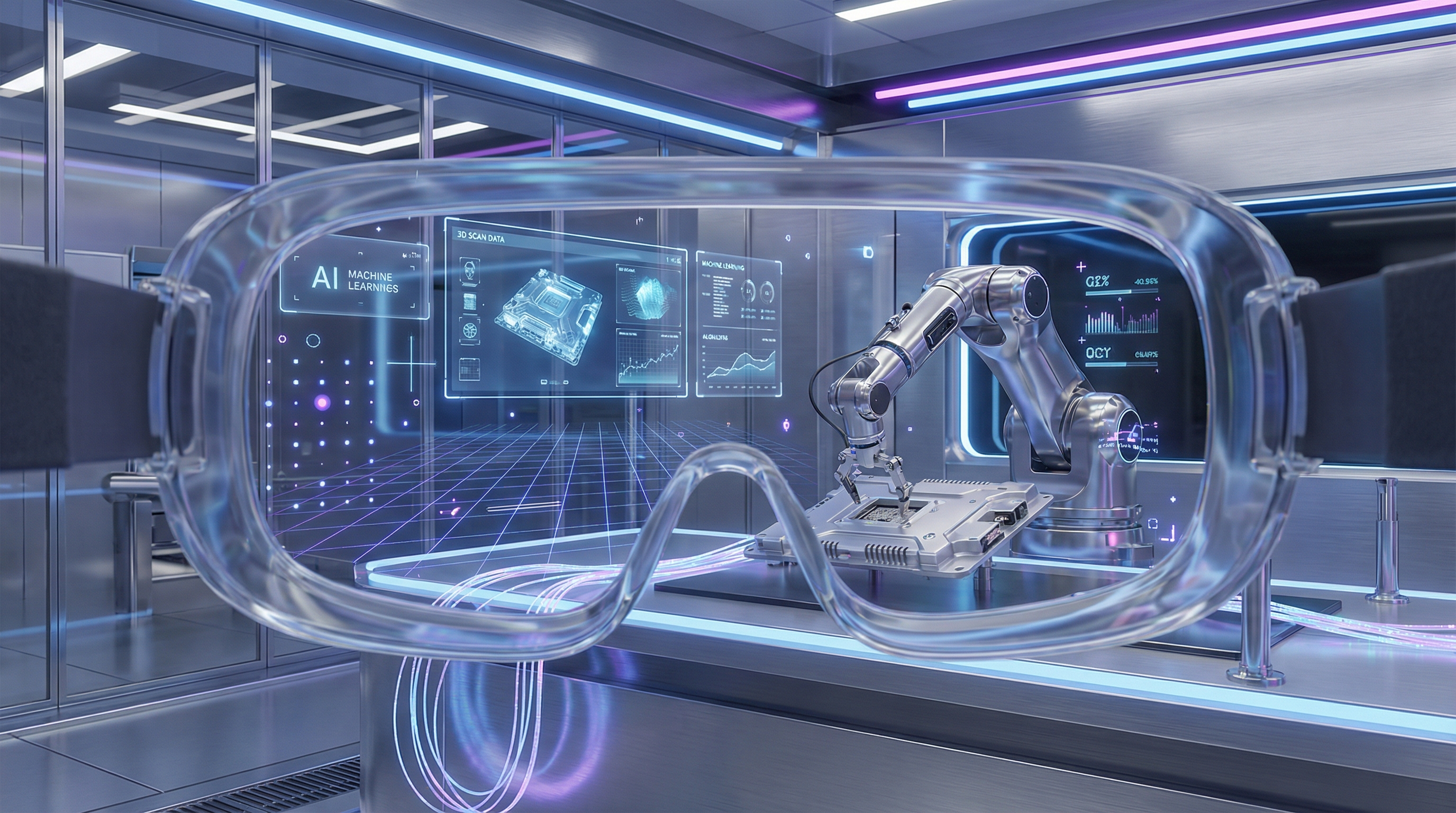

If Physical AI provides the intelligence, spatial computing provides the map. Spatial computing is technology that lets computers understand, navigate, and interact with three-dimensional space. It’s how machines and humans share a common understanding of physical environments.

Spatial computing combines several capabilities: real-time 3D mapping and reconstruction, precise tracking of objects and people in space, understanding of spatial relationships and physics, and overlaying digital information onto physical environments. These capabilities matter for both autonomous robots navigating warehouses and users experiencing immersive VR.
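Overlaying digital information onto a physical scene ultimately comes down to projecting a tracked 3D point into the 2D image a user sees. A minimal sketch using the standard pinhole camera model follows. The function name and the intrinsic values (focal lengths `fx`, `fy` and principal point `cx`, `cy`) are illustrative assumptions, not the parameters of any particular headset.

```python
def project_point(point_cam, fx, fy, cx, cy):
    """Project a 3D point in camera coordinates (metres) to pixel coordinates.

    Uses the pinhole model: u = fx * x / z + cx, v = fy * y / z + cy.
    Returns None when the point is at or behind the camera plane.
    """
    x, y, z = point_cam
    if z <= 0:
        return None
    u = fx * x / z + cx
    v = fy * y / z + cy
    return (u, v)


# Assumed intrinsics for a 1280x720 image; a virtual label anchored 2 m ahead.
label_pixel = project_point((0.5, -0.25, 2.0), fx=800.0, fy=800.0, cx=640.0, cy=360.0)
print(label_pixel)  # (840.0, 260.0)
```

Real systems add lens distortion correction and a world-to-camera pose transform before this step, which is where the tracking and mapping capabilities come in.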

Spatial computing has evolved quickly. Apple’s Vision Pro, despite its $3,499 price tag and mixed commercial reception, showed what’s possible. Its passthrough cameras, hand tracking, and spatial audio made digital objects feel present in physical space. Meta’s Quest 3, at a fraction of the price, brought similar capabilities to more people.

Spatial computing goes beyond consumer headsets. In industrial settings, it powers digital twins (virtual replicas of physical systems that update in real time based on sensor data). Siemens’ PAVE360 platform uses spatial computing to create digital models of mobility and energy systems. Engineering teams can design, simulate, and validate smart infrastructure before any physical construction begins.

When spatial computing meets Physical AI, each extends the other. Robots can perceive three-dimensional space with the same fidelity as spatial computing systems. VR environments can respond to physical inputs with Physical AI intelligence. The boundary between digital and physical starts to blur.

Consider a partnership announced in November 2025: Butterfly Network, maker of handheld ultrasound devices, signed a five-year co-development agreement with Midjourney, the AI image-generation company. Midjourney paid $15 million upfront and $10 million annually for access to Butterfly’s ultrasound-on-chip platform.

Why would an AI art company need ultrasound technology? Because Butterfly’s chip isn’t just a medical device. It’s a spatial sensor that can perceive depth, motion, and structure through surfaces in ways cameras cannot. For Midjourney’s vision of generating “holodeck-like” immersive environments, this sensing capability could let VR systems perceive the physical world with new richness, blending acoustic, visual, and spatial data to create responsive, intelligent virtual spaces.

The Catalysts: What’s Driving the Convergence

Three technological catalysts are accelerating the convergence of Physical AI and spatial computing into next-generation VR+ experiences.

Generative AI and Real-Time World Building

Generative AI has transformed what’s possible in virtual environments. Tools such as Midjourney and OpenAI’s Sora can now create photorealistic scenes that increasingly obey physical laws. But the real breakthrough comes from spatial intelligence models like those developed by Fei-Fei Li’s World Labs.

World Labs describes itself as “a spatial intelligence company, building frontier models that can perceive, generate and interact with the 3D world.” Its first product, Marble, turns text, photos, and video into persistent, editable 3D environments. Li argues that “world models must be able to generate worlds consistent in perception, geometry and physics,” with generative AI maintaining spatial coherence over time.

This changes VR development from manually modeling every object and environment to a more dynamic approach where AI can generate, adapt, and personalize virtual spaces in real time. Instead of pre-rendered worlds, we’re moving toward responsive environments that adapt to user needs, preferences, and actions.

Advanced Sensing and Multimodal Perception

Next-generation Physical AI systems don’t just “see” through ordinary cameras. They consume multimodal imaging: visible light, infrared, ultrasound, LiDAR, and other sensing modalities. This rich sensory input creates a more sophisticated understanding of physical environments.

For VR+ systems, this means virtual environments can be grounded in a detailed understanding of physical space. Mixed reality experiences can blend digital objects with physical ones because the system understands surfaces, lighting, occlusion, and spatial relationships with precision. The result is immersion that feels natural rather than forced.

Edge Computing and Onboard Intelligence

The shift from cloud-dependent to edge-based AI processing has real implications. When VR headsets, AR glasses, and robots can run sophisticated AI models locally, latency drops, privacy improves, and experiences become more responsive.

Edge AI chips now deliver real-time inference and low-power physical sensing in small packages. This lets standalone VR headsets perform complex spatial mapping, hand tracking, and environment understanding without tethering to powerful computers. It also enables the lightweight AR glasses that are rapidly gaining market share, offering spatial computing capabilities in socially acceptable form factors.

From Prototype to Production: Real-World Applications

Physical AI and spatial computing aren’t theoretical. Organizations across industries are deploying these technologies at scale, moving from experimental pilots to production systems that deliver measurable value.

Manufacturing and Industrial Operations

BMW is integrating AI automation across its factories globally. In one deployment, BMW uses autonomous vehicle technology (assisted by sensors, digital mapping, and motion planners) to let newly built cars drive themselves from the assembly line through testing to the factory’s finishing area, all without human assistance.

The company is also testing humanoid robots at its South Carolina factory for tasks requiring dexterity that traditional industrial robots lack: precision manipulation, complex gripping, and two-handed coordination. These robots, powered by Physical AI, can adapt to variations in parts, recover from errors, and work alongside human employees safely.

Amazon recently deployed its millionth robot, part of a diverse fleet working alongside humans in fulfillment centers. Its DeepFleet AI model coordinates the movement of this massive robot army across the entire fulfillment network, improving robot fleet travel efficiency by 10% while reducing congestion and accidents.

Training and Simulation

VR’s killer application may be training. Walmart has onboarded over one million associates using VR simulations that replicate real-world, high-pressure retail scenarios, including the intensity of Black Friday crowds. By partnering with VR training platforms like Strivr, Walmart provides employees with repeatable, safe practice in scenarios that would be impossible or expensive to recreate physically.

Boeing is revolutionizing aerospace training with its Virtual Airplane platform, a dynamic 3D environment where engineers, mechanics, and pilots can interact with highly detailed aircraft models. This immersive training tool lets users explore the airplane’s internal systems in real time, improving knowledge retention and operational readiness while cutting the need for physical prototypes.

The training advantage goes beyond cost savings. VR training provides instant feedback, allows unlimited repetition, enables scenario variation, and generates analytics on performance. Organizations can identify knowledge gaps, track improvement, and ensure consistent training quality across global operations.

Healthcare and Medical Applications

Physical AI and spatial computing are transforming healthcare delivery. GE HealthCare is building autonomous X-ray and ultrasound systems with robotic arms and machine vision technologies that can position themselves, adjust for patient anatomy, and capture optimal images with minimal human intervention.

In surgery, AI-driven robotic systems are becoming more sophisticated. These systems don’t just follow preprogrammed motions. They perceive tissue characteristics, adapt to anatomical variations, and provide surgeons with enhanced precision and control. VR training systems allow surgeons to practice complex procedures repeatedly in realistic simulations before operating on patients.

Remote Collaboration and Design

In a globalized world where teams are distributed across geographies, VR and spatial computing offer natural “shared spaces.” Designers, architects, engineers, and product teams can meet inside virtual studios, inspect 3D models, make changes collaboratively, walk around building prototypes, or simulate user journeys.

This spatial collaboration is more intuitive than screen sharing because it supports depth, scale, and natural spatial relationships. When combined with Physical AI that can understand gestures, interpret intent, and adapt environments dynamically, these collaborative spaces become productive work environments rather than novelties.

The 2025-2026 Inflection Point: Market Signals and Momentum

2025 was a turning point for the immersive technology ecosystem. Market shifts, hardware innovations, and strategic realignments changed how the industry views the future of VR, AR, and spatial computing.

According to International Data Corporation (IDC), the global AR/VR headset market grew 18.1% year-over-year in Q1 2025. But the real story is in the composition of that growth. While traditional VR headsets remained relevant, the momentum came from optical see-through smart glasses and mixed reality devices. Vendors like XREAL and Viture saw rapid growth. Viture posted a 268.4% year-on-year increase.

This shift reflects changing user preferences. Consumers and enterprises increasingly favor lighter, more wearable, socially acceptable devices that blend virtual content with real-world awareness. These devices work for everyday use, enterprise adoption, and hybrid workflows. Fully enclosed VR headsets struggle with these use cases.

Despite Meta’s continued dominance in VR hardware (holding approximately 50.8% market share), global VR headset shipments declined 12% in 2024. The challenges are familiar: hardware cost, headset weight, battery life, heat, limited compelling non-gaming content, and comfort issues. In response, major players are pivoting toward lighter smart glasses, MR/XR platforms, and “AI glasses” that augment reality with intelligent capabilities.

The shipment figures point the same way. Combined AR/VR and smart-glasses shipments reached approximately 14.3 million units in 2025, fueled by growth in lightweight XR wearables. While traditional VR may be plateauing, the broader immersive market is accelerating.

Behind the hardware shifts, fundamental technology advances are changing what’s possible. Research breakthroughs in 2025 demonstrated early success blending generative AI, real-time avatar reconstruction, and hybrid passthrough VR/MR experiences. Cloud-based VR streaming leveraging real-time rendering and adaptive streaming gained viability, lowering barriers for high-fidelity immersive experiences without requiring expensive local hardware.

Industry analysts expect 2026 to be pivotal. As XR devices become lighter and more capable, as AI-powered content generation reduces development costs, and as enterprise use cases mature, adoption should accelerate. The technology is crossing from “interesting experiment” to “strategic capability.”

The Future: Post-Virtual and Beyond

Where does this convergence lead? The trajectory points toward experiences that challenge our assumptions about reality and presence.

Yann LeCun, after a decade leading Meta’s FAIR lab, is launching a startup focused on “a new paradigm of AI architectures” capable of understanding physical worlds, reasoning over time, and executing complex actions. Fei-Fei Li’s World Labs is building spatial intelligence models that can perceive, generate, and interact with 3D environments. These pioneers are building AI systems that understand physical space as naturally as humans do.

When you combine LeCun’s Physical AI with Li’s spatial intelligence and apply them to VR+, a clearer picture of the future emerges. Virtual environments won’t just look realistic. They’ll behave realistically, responding to physical laws, adapting to user actions, and generating content dynamically based on context and need.

This leads to what neuroscientist Moran Cerf and innovation strategist Robert Wolcott call “Post-Virtual,” the point where we cannot distinguish VR from default reality. Post-Virtual is the hard Turing Test for VR, a threshold likely to be surpassed later this century.

Before we reach Post-Virtual, several intermediate milestones will reshape industries and daily life:

Humanoid Robots and Embodied AI

The intersection of agentic AI systems with Physical AI robotics will produce robots whose “brains” are sophisticated AI agents. Instead of custom robotics for each domain, general agentic modules will be reused across warehouses, homes, healthcare, agriculture, and other sectors.

UBS estimates that by 2035, there will be 2 million humanoid robots in the workplace, increasing to 300 million by 2050. The total addressable market could reach $30-50 billion by 2035 and $1.4-1.7 trillion by 2050. As manufacturing costs drop (Goldman Sachs reports humanoid manufacturing costs fell 40% between 2023 and 2024), these robots will become economically viable for broader applications.
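To put those projections in perspective, it helps to compute the annual growth rate they imply. The sketch below uses the ordinary compound annual growth rate formula. Assuming smooth year-over-year compounding between 2035 and 2050 is our simplification, not part of the UBS estimate, and the function name is illustrative.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate implied by growing from start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1


years = 2050 - 2035

# Installed base: 2 million robots in 2035 to 300 million in 2050
robots_cagr = implied_cagr(2e6, 300e6, years)

# Addressable market: low end ($30B to $1.4T) and high end ($50B to $1.7T)
market_low_cagr = implied_cagr(30e9, 1.4e12, years)
market_high_cagr = implied_cagr(50e9, 1.7e12, years)

print(f"robots: {robots_cagr:.1%}")
print(f"market: {market_high_cagr:.1%} to {market_low_cagr:.1%}")
```

Under that assumption, the robot count would need to grow by roughly 40% a year for fifteen years, while the market value would grow by roughly 26% to 29% a year. The gap between the two rates is consistent with the expectation that per-unit prices keep falling.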

Quantum Robotics and Exotic Form Factors

Looking further ahead, quantum robotics (the combination of quantum computing and AI-powered robotics) holds long-term potential. Quantum algorithms could enable robots to operate at speeds impossible for classical computers, with quantum sensors enhancing perception and interaction capabilities.

Meanwhile, researchers are experimenting with machines that blur biological lines: robots powered by living tissue, machines that transition between solid and liquid states using magnetic fields, and systems that integrate living organisms into mechanical frameworks. These exotic form factors remain largely experimental but show how we’re rethinking the relationship between artificial and biological systems.

Navigating Challenges and Seizing Opportunities

The convergence of Physical AI and spatial computing creates opportunities, but real challenges remain.

Technical Hurdles

The gap between simulated and real-world performance continues to challenge developers. As Ayanna Howard, dean of engineering at Ohio State University, notes: “Visual images in simulated environments are pretty good, but the real world has nuances that look different. A robot might learn to grab something in simulation, but when it enters physical space, it’s not a one-to-one match.”

Advances in physics engines, synthetic data generation, and hybrid training approaches are narrowing this gap, but achieving the quality of physical training at the scale and safety of simulation remains an active research challenge.
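One commonly used way to narrow the gap is domain randomization: vary the simulator's physical and visual parameters from episode to episode so a policy cannot overfit to one idealized world. The article does not name this technique, so treat the sketch below as an illustrative example. The parameter names and ranges are hypothetical, not taken from any specific simulator.

```python
import random
from dataclasses import dataclass


@dataclass
class SimParams:
    friction: float          # surface friction coefficient
    object_mass_kg: float    # mass of the object to grasp
    light_intensity: float   # relative scene brightness
    camera_noise_std: float  # standard deviation of pixel noise


# Hypothetical ranges; a real project would tune these against measured hardware.
RANGES = {
    "friction": (0.4, 1.2),
    "object_mass_kg": (0.05, 2.0),
    "light_intensity": (0.3, 1.5),
    "camera_noise_std": (0.0, 0.05),
}


def sample_params(rng):
    """Draw one randomized simulator configuration, uniform within each range."""
    return SimParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})


rng = random.Random(42)
for episode in range(3):
    params = sample_params(rng)
    # A training loop would rebuild the simulated scene with `params` here.
    print(episode, params)
```

The design bet is that if the randomized ranges are wide enough to include real-world conditions, the physical world looks to the robot like just one more variation.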

Safety and Trust

Physical AI systems that manipulate the physical world carry real risks. Small error rates can have cascading effects, potentially leading to production waste, equipment damage, or safety incidents. As these systems move from controlled factory environments into public spaces, comprehensive safety strategies integrating regulatory compliance, risk assessments, and continuous monitoring become essential.

Trust is equally critical. Research shows a gap between stated trust and behavioral trust. People often say they don’t trust AI but behave as if they do. With physical embodiments of AI, this behavioral overtrust becomes dangerous because these systems apply physical forces with potentially irreversible consequences.

Privacy and Ethics

As VR/XR and AI converge, issues around privacy, data collection, environment scanning, gaze tracking, and biometric data will intensify. Developers and companies must adopt transparent data policies, user consent practices, privacy-by-design principles, and ethical guidelines to build trust.

The data these systems collect is extraordinarily intimate. Not just what you look at, but how long you look, where your attention wanders, how your body responds. Clear boundaries and meaningful user control will be essential for mainstream adoption.

Regulatory Complexity

Companies must navigate overlapping and sometimes contradictory requirements across jurisdictions. As robots and AI systems move into public spaces, regulatory bodies are developing new frameworks for safety certification, liability, and operational oversight. Organizations that engage proactively with regulators and help shape sensible standards will have advantages over those that wait for regulations to be imposed.

Conclusion: The Revolution Begins Now

Physical AI and spatial computing are converging right now, in factories and warehouses, hospitals and training centers, design studios and research labs around the world.

Mark Zuckerberg’s vision of the metaverse wasn’t wrong. It was premature. The missing ingredients weren’t better graphics or cheaper headsets. They were intelligence, spatial understanding, and the ability to bridge digital and physical worlds. Those ingredients are coming together now.

Over the next three to five years, VR+ will emerge transformed by generative AI, Physical AI, and spatial computing. Virtual environments will become responsive and intelligent. Robots will move from scripted automation to adaptive intelligence. Mixed reality will blend digital and physical so seamlessly that the distinction becomes less meaningful.

For business leaders, the path forward is clear: start experimenting with spatial computing, Physical AI, and immersive technologies. The organizations that learn fastest, that develop institutional knowledge and technical capabilities early, will help shape this new medium and capture disproportionate value.

For developers and creators, the opportunity is real. The tools, platforms, and capabilities to build the next generation of immersive, intelligent experiences exist today. The question isn’t whether this future will arrive. It’s who will build it.

The VR+ revolution is here. What you do with it is up to you.